The Accounting Journal: speed isn’t what it used to be

11th August, 2015

Part 5 of MYOB’s 6-part series on the history of accounting – from its origins to today, and beyond. Check out Part 4 here.

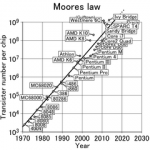

We always hear about how much faster computers keep getting. The oft-quoted “Moore’s Law”, in its popular form, states that capacity (speed and storage) per dollar roughly doubles every one to two years, and that exponential growth projection has held up – more or less – for 50 years now.

You don’t have to do the maths on that; you’re probably reading this on a device which can do it for you, billions of times per second, silently, efficiently and without error.
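For anyone who does want to see the maths, here is a minimal sketch in Python. It assumes perfectly steady doubling, which real hardware never quite delivers, and the function name `growth_factor` is simply an illustrative choice, not anything from a standard library. It compares the two ends of the "every 1-2 years" range over 50 years.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times capacity multiplies under steady doubling.

    Assumption: growth is a clean exponential, doubling once per period.
    """
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Compare the optimistic (1 year) and conservative (2 year) doubling periods.
    for period in (1.0, 2.0):
        factor = growth_factor(50, period)
        print(f"Doubling every {period:g} year(s) for 50 years: about {factor:,.0f}x")
```

The gap between the two results is enormous (roughly 34 million times versus about a million billion times), which is why the "more or less" in any Moore's Law claim matters so much.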

(There’s an old joke about how if automobiles experienced the same development curve as personal computers, today’s cars would cost $1.50, travel half a million kilometres on just a litre of petrol, and explode once a week killing everyone inside…)

Reliability aside – and to be fair, modern computers are a lot more reliable than the early models – there’s nothing else in human experience which compares to the rapid development of computer hardware over time. But simple graphs of mega-this or giga-that don’t tell the whole story.

Humble beginnings

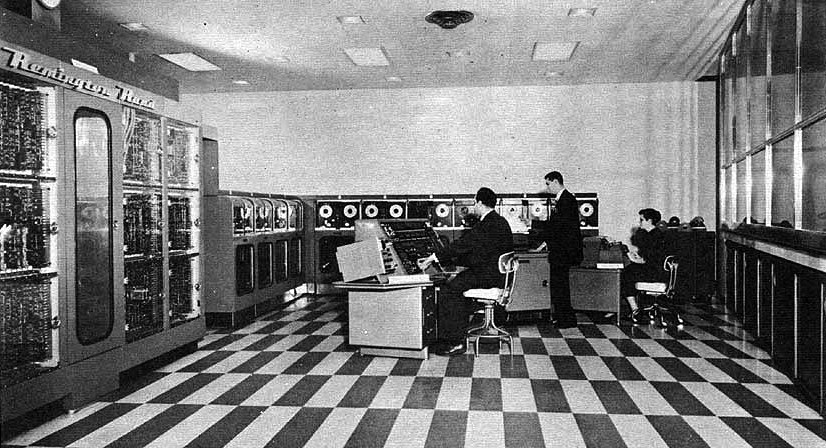

As we learned previously, the era of accounting software began just 60 years ago, when General Electric purchased a Univac 1 from Remington-Rand (now Unisys). The Univac was the first commercially-available product which we would consider a full-fledged computer: it was electronic rather than mechanical, and used stored programs rather than having to be rewired for every new job, among other features.

Early versions of a pioneering payroll application were first tested near the end of 1954. GE’s complex agreements with labour unions, coupled with the Univac’s processing speed – a mind-boggling 1,905 operations per second! – meant the job initially took over 40 hours to run. After a great deal of work spanning 1955 (into 1956, by some accounts), they got that time down to under 10 hours.

The $1.2 million computer weighed 29 tons (and required more tons of equipment to keep it cool) and consumed enough electricity to power a neighbourhood. But it still offered serious cost savings over the armies of clerks required to perform and check the work by hand. This bottom line quickly became apparent to other businesses. Remington-Rand sold dozens of Univacs during the 1950s, and punch-card tabulator behemoth IBM was not far behind in entering, and ultimately taking over, the market.

Faster, better, cheaper

For decades to come, however, computers remained expensive enough that their processor time was carefully rationed and billed. It wasn’t until the era of the microcomputer began in the mid-1970s that individual ownership of computers became economically feasible.

Again, after the initial wave of hobbyist-enthusiasts had reached the limits of simple games and novelties, one of the first business applications to come out for these newfangled gadgets was aimed at – you guessed it! – accounting tasks.

Accounting software became truly widespread in the 1980s (see last week’s article), reaching into businesses large and small, and the effects began to be visible in economic productivity figures.

From the Harvard Business Review magazine:

“…in 1954 Roddy F. Osborn predicted in [the Harvard Business Review] that GE’s use of the UNIVAC for business data processing would lead to a new age of industry: ‘The management planning behind the acquisition of the first UNIVAC to be used in business may eventually be recorded by historians as the foundation of the second industrial revolution; just as Jacquard’s automatic loom in 1801 or Taylor’s studies of the principles of scientific management a hundred years later marked turning points in business history.’”

Skeptics may disagree

So if today’s computer chips are vastly faster than those of just a decade or two ago, why does it still take five minutes to boot up a PC? Why isn’t everything instantaneous, for that matter? In a word: software.

Operating systems and applications get more complex all the time, as available resources increase to allow more features to be added. And methods which make things more efficient for developers – which make it possible for a given team to produce and test more functionality, on a broader variety of platforms, in a given timeframe – can also mean that the resulting software runs less efficiently.

In other words, a modern computer can be working harder when sitting “idle” than an older model going flat out on its primary task. And that’s not entirely a bad thing!

Early software had to be efficient, custom-tuned for each machine and thrifty with every last bit of scarce memory, because there was no other choice. That’s one reason why it took so long, and cost so much, to create those early applications. Only governments and the largest corporations could afford not just the computer itself, but the maintenance and development costs.

Now that hardware is vastly more powerful and inexpensive, it makes no sense to work that way. Developers use powerful tools that help them create new features and better user interfaces much more quickly, despite the overhead these tools introduce, because the ever-faster hardware makes up for it. It’s like the difference between setting lead type on a printing press vs. using a word processor.

And the faster hardware pays for itself in the time saved by these ever-improving user interfaces – not having to train employees for weeks or months to memorize arcane command sequences for each new feature.

If we were all still using software that was written by-the-byte and optimised by hand for each configuration, we’d still be waiting for anything resembling Windows, Office, or the world wide web for that matter…

Next week: Clouds on the horizon – the present and future of accounting